Welcome to the adolescence of AI

Artificial intelligence does not have the cuddliest of reputations. It is either coming for your livelihood or, if movies are to be believed, your life.


Google, however, has unearthed a new problem: Its AI is too friendly—much, much too friendly.

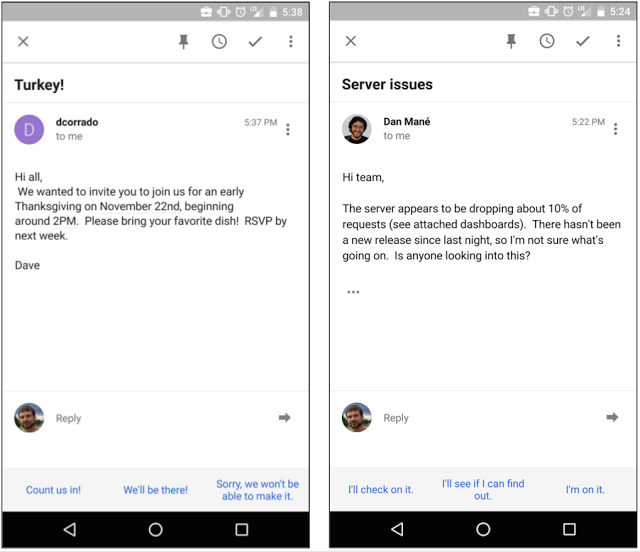

In early November, the advertising (and search, and web) giant introduced “Smart Reply,” a feature in its Inbox app that could suggest short replies to basic emails. “Machine learning is used to scan emails and understand if they need replying to or not, before creating three response options,” Wired’s James Temperton explained. He continued: “An email asking about vacation plans, for example, could be replied to with ‘No plans yet’, ‘I just sent them to you’ or ‘I’m working on them’.” Or at least that was the theory.


In a blog post last week, Greg Corrado, a senior research scientist at Google, noted that “Smart Reply” was all too willing to respond with “I love you.” As he put it:

> As adorable as this sounds, it wasn’t really what we were hoping for. Some analysis revealed that the system was doing exactly what we’d trained it to do, generate likely responses — and it turns out that responses like “Thanks”, “Sounds good”, and “I love you” are super common — so the system would lean on them as a safe bet if it was unsure. Normalizing the likelihood of a candidate reply by some measure of that response’s prior probability forced the model to predict responses that were not just highly likely, but also had high affinity to the original message. This made for a less lovey, but far more useful, email assistant.
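For the curious, here is a toy illustration of the fix Corrado describes. Google’s post does not spell out which “measure of that response’s prior probability” it used, so this sketch assumes a simple, PMI-style score: the log-likelihood of a reply given the message, minus a weighted log of how common that reply is across all email. Every candidate, probability, and function name below is hypothetical and made up for illustration.

```python
import math

# Hypothetical numbers for one incoming email ("Any vacation plans?").
# cond_prob:  P(reply | message), what a response model might assign (made up).
# prior_prob: P(reply) across all email, i.e. how common the reply is overall (made up).
CANDIDATES = {
    "I love you": {"cond_prob": 0.30, "prior_prob": 0.20},
    "Thanks": {"cond_prob": 0.25, "prior_prob": 0.25},
    "No plans yet": {"cond_prob": 0.20, "prior_prob": 0.01},
    "I'm working on them": {"cond_prob": 0.15, "prior_prob": 0.005},
    "I just sent them to you": {"cond_prob": 0.10, "prior_prob": 0.004},
}


def raw_score(stats):
    """Plain log-likelihood of the reply given the message."""
    return math.log(stats["cond_prob"])


def normalized_score(stats, weight=1.0):
    """Log-likelihood minus a weighted log prior (an assumed PMI-style score).

    Replies that are common everywhere get penalized; weight=0 recovers raw_score.
    """
    return math.log(stats["cond_prob"]) - weight * math.log(stats["prior_prob"])


def top_replies(candidates, scorer, k=3):
    """Return the k candidate replies with the highest score."""
    return sorted(candidates, key=lambda r: scorer(candidates[r]), reverse=True)[:k]


if __name__ == "__main__":
    print("raw:       ", top_replies(CANDIDATES, raw_score))
    print("normalized:", top_replies(CANDIDATES, normalized_score))
```

Ranked by raw likelihood, “I love you” comes out on top as the safe bet; once each score is divided by the reply’s overall popularity (subtracted, in log space), it drops out of the top three in favor of replies that actually fit the question. A full subtraction (weight 1.0) can overcorrect toward rare, odd replies, which is why a weight between 0 and 1 is a plausible middle ground; what Google actually tuned is not stated in the post.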

This looks like a technology problem, but it is really a human problem. One of the most common ways for artificial intelligence to get smarter is by emulating human behavior. As BuzzFeed’s Mat Honan documented through parrot-centric chicanery, M, Facebook’s personal assistant, is currently powered largely by human contractors. “Before Facebook can make its robot act like lots of humans,” he concluded, “it needs a lot of humans to act like robots.” Similarly, Google’s machine learning taught “Smart Reply” to react to messages the way a human does, which, in a vacuum, means saying “I love you.”


If AI is growing towards human knowledge, the “Smart Reply” snafu suggests that it is somewhere in its adolescence, maybe a few weeks before its Bar Mitzvah. And like most teenagers, AI says “I love you” too early and too often. It’s a phase most people grow out of, albeit with slightly less difficulty than passing the Turing Test. So maybe there is hope for your nephew and AI—not necessarily in that order.


Alternatively, have you considered responding to every email with “I love you”? Should you do so and write it up as a piece of low-stakes gonzo journalism, please share said piece.