What is #DeepDream and why is everyone getting so weird with it?

To answer your question, Mr. Dick, yes, androids do dream of electric sheep. Or, at least, artificial intelligence does. And it’s less sheep and more like an insectoid nightmare of sheep as seen through a faint kaleidoscopic filter. We are fascinated and disturbed, Mr. Dick, but the future isn’t quite as horrendous as you might have thought it to be. Not yet.

Look! Here’s another of these strange machine dreams, this time featuring US president Andrew Jackson’s portrait on the $20 bill morphed into an arachnid’s face. Another! This time of one of the most iconic images of 9/11, yet the thick trails of smoke from the twin towers have become … is that? Yes, it seems to be beagles. And look how the sky and clouds have swirled as if thick paint to an impressionist’s thumb. This is what the AI formulates in its dozing mind, Mr. Dick. Is it all you imagined?

///

It’s possible that you (not Philip K. Dick) have seen #DeepDream on the internet somewhere in the past week. I know I have. It’s been making the rounds, that hashtag, and it’s usually accompanied by an image of something. A something that is probably monstrous, and terrifying, and just about everything you don’t want to see when casually browsing social media while sipping on your tea. But hey, it happened, and now you have questions: mostly, what the fuck was that?

As Google put it, it’s “a visualization tool designed to help us understand how neural networks work.” This will take some explaining, so get comfy for a sec and concentrate. Neural networks are used in artificial intelligence to help machines learn from experience. They’re inspired by how our own nervous systems and brains work. A network is made up of sets of ‘neurons’ that are all interconnected and communicate with each other when given an input (usually a large number of inputs) in order to determine what the correct output might be. They’re used most notably in speech recognition and image classification.
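If that still sounds abstract, here’s a minimal sketch in Python, assuming a made-up network with two inputs, two hidden ‘neurons’ and one output. The weights and the `tiny_network` name are invented for illustration. Real networks learn millions of weights from data rather than having them typed in by hand.

```python
import math


def neuron(inputs, weights, bias):
    """One 'neuron': a weighted sum of its inputs, squashed into the range 0..1."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-total))  # sigmoid activation


def tiny_network(inputs):
    """Two hidden neurons feed one output neuron. All weights are hypothetical."""
    hidden = [
        neuron(inputs, [1.0, -1.0], 0.0),
        neuron(inputs, [-1.0, 1.0], 0.0),
    ]
    return neuron(hidden, [2.0, 2.0], -2.0)


output = tiny_network([1.0, 0.0])
```

Each neuron only adds up its weighted inputs and squashes the result. Stacking layers of them is where the interesting behavior comes from.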


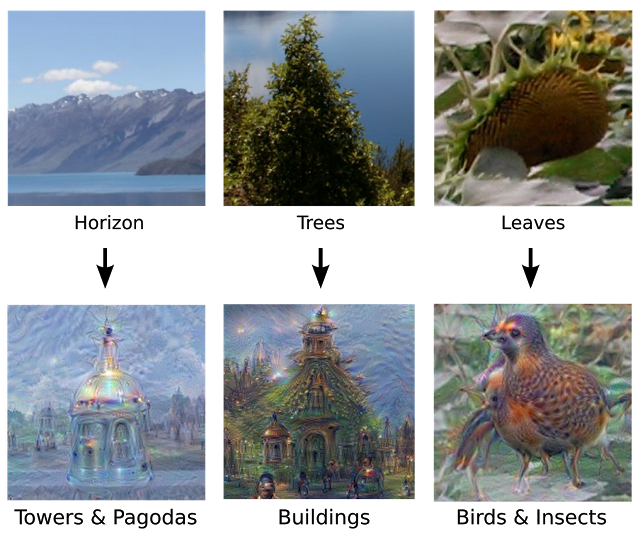

A couple of weeks ago, Google revealed the results of one of its experiments in training artificial neural networks. Let’s say that Google wants a network to learn what a fork looks like, and recognize one in an image. There’s no quick and easy way to do this. The staff will show the network loads of pictures of forks so that it determines a fork is a handle with four tines at the end. Once the network seems to have understood that, a test can then be conducted, which involves having the network generate an image of a fork all by itself. This gives Google’s staff a visual to work with to see how right or wrong the network is, and then tweak its training accordingly.

This is where the fun starts. When generating images, you don’t have to tell the network which feature to enhance. You can hand it an image and let it decide automatically what to amplify. Google’s engineers explain this best: “We ask the network: ‘Whatever you see there, I want more of it!’ This creates a feedback loop: if a cloud looks a little bit like a bird, the network will make it look more like a bird. This in turn will make the network recognize the bird even more strongly on the next pass and so forth, until a highly detailed bird appears, seemingly out of nowhere.”
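To make that feedback loop concrete, here’s a toy sketch in Python. It is not Google’s code: the 3x3 ‘bird detector’, the `BIRD_PATTERN` weights and the step size are all hypothetical, standing in for a real network’s layers. The trick it illustrates is nudging the pixels, not the network, in whatever direction makes the detector respond more strongly.

```python
# Made-up 3x3 "bird" detector, flattened row by row (hypothetical weights).
BIRD_PATTERN = [0.0, 1.0, 0.0,
                1.0, 1.0, 1.0,
                0.0, 1.0, 0.0]


def bird_score(image):
    """How strongly the toy detector responds to a flattened 3x3 image."""
    return sum(w * p for w, p in zip(BIRD_PATTERN, image))


def dream_step(image, step_size=0.1):
    """Nudge every pixel uphill on 0.5 * score**2.

    The gradient of that objective for each pixel is score * weight, so the
    more bird-like the image already looks, the harder it gets pushed.
    """
    score = bird_score(image)
    return [p + step_size * score * w for p, w in zip(image, BIRD_PATTERN)]


def dream(image, passes=5):
    """Run several passes and record the detector's score after each one."""
    scores = [bird_score(image)]
    for _ in range(passes):
        image = dream_step(image)
        scores.append(bird_score(image))
    return image, scores


# A faint, cloud-like smudge that only vaguely matches the pattern.
cloud = [0.2, 0.3, 0.1,
         0.3, 0.2, 0.3,
         0.1, 0.2, 0.2]
dreamed, scores = dream(cloud)
```

In this sketch the score climbs on every pass, and only the pixels the detector cares about change. That is the “I want more of it” loop in miniature.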

It’s like doing a Rorschach test (or ink blot test) except the subject is a computer that likes to draw whatever it sees with maniacal dedication, turning its interpretation into an apparition through virtual art.

This iterative drawing process that the networks perform is what Google calls “inceptionism.” Google’s engineers have been toying around with this as shown in the Inceptionism Gallery. Some of the strangest, most elaborate formations you can see there are generated from random-noise images. The networks are left on their own to see whatever they see inside them, and then constantly zoom in on the image to create an ever-evolving gallery of oneiric visual data.
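The enhance-then-zoom routine can be sketched in a few lines of Python. This is an illustration under loud assumptions: `enhance` is a hypothetical stand-in for the network’s pass (it only exaggerates contrast), and the grid size, gain and frame count are arbitrary.

```python
import random


def zoom_in(grid):
    """Crop the central half of a square grid and stretch it back to full
    size using nearest-neighbour sampling."""
    n = len(grid)
    start = n // 4
    return [[grid[start + r // 2][start + c // 2] for c in range(n)]
            for r in range(n)]


def enhance(grid, gain=1.2):
    """Hypothetical placeholder for the network: push every value away from
    the mean, then clamp to the range 0..1."""
    flat = [v for row in grid for v in row]
    mean = sum(flat) / len(flat)
    return [[min(1.0, max(0.0, mean + gain * (v - mean))) for v in row]
            for row in grid]


def dream_frames(size=8, frames=4, seed=0):
    """Start from random noise, then alternate enhancing and zooming."""
    rng = random.Random(seed)
    grid = [[rng.random() for _ in range(size)] for _ in range(size)]
    gallery = [grid]
    for _ in range(frames):
        grid = zoom_in(enhance(grid))
        gallery.append(grid)
    return gallery


gallery = dream_frames()
```

Swap the placeholder `enhance` for a real network’s pass and each frame would become the starting point for the next, which is how a zoom can keep going indefinitely.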


The reason you might have been seeing these images pop up all over the internet in the past week is that Google open-sourced the code on July 1st so that those who know what they’re doing can mess around with it. Before doing so, Google said that it had been wondering whether “neural networks could become a tool for artists—a new way to remix visual concepts—or perhaps even shed a little light on the roots of the creative process in general.” This seems to have been the primary reason for sharing the code, driven by interest from programmers and artists alike, with the only ask being to use #DeepDream when sharing images so researchers can check them out.

As you can see from the results, the networks that feed #DeepDream are fond of seeing certain types of objects in the images they are fed. This is more than creating pagodas out of mountains or temples out of trees, as Google’s engineers saw. It’s more widespread than that: there are dogs, slugs, and eyes frickin’ everywhere. Show the networks a constellation and they’ll see an orgy of puppyslugs. Slip them some anime high school girls and their faces will grow fur and big wet noses. Most criminal of all: show them a sleeping house cat and, yep, moments later it’ll have dogs for paws and a face.

This doesn’t happen all the time, as proven by this image of the NSA building re-interpreted as a smeared stack of polychromatic cars. But it’s a common sighting inside these artificial dream pictures. Either the networks need further training to refine their recognition of dogs, or Mr. Dick’s question needs to be edited for the times: Do Androids Dream of Electric Puppyslugs? Yes. Yes, they do, Mr. Dick.

Header image by Thorne Brandt.