Videogames are creating lighting that moves through the world

This article is part of a collaboration with iQ by Intel.

The history of video gaming is one of creative content being shaped by technical evolutions, from more powerful processors and memory, to more complex methods of control. Even how game graphics handle light has a history.

Steve Manekeller is the Lead Graphics Programmer at CCP, the game designers behind the massive online game EVE Online. He sees the history of this evolving tech in his own game every day.

“Lighting in video games has come a long way since games like Doom or Ultima Underworld. We moved from hand-painted billboards for monsters—no dynamic lighting at all!—to physically accurate lighting. In its 12 years of existence, EVE Online has made it through several different lighting models and just recently released physically based rendering.”

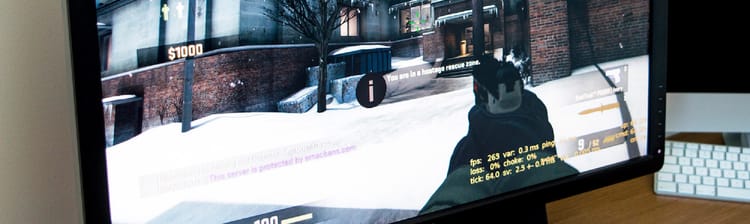

The special effects sequences in Hollywood movies rely on powerful computers that can spend hours on a single image, using a technique called raytracing that traces artificial rays of light to illuminate it properly. But video games, with their constantly shifting camera angles and unpredictable actions, have to do things in real time. This resulted in a simplification of lighting: tricks that made things seem to be properly lit. First, areas were simply painted brighter. Soon, static lights with actual directional illumination were used to cast shadows. Effects like artificial reflections (in mirrors or puddles) and lens flare (as in a J.J. Abrams movie) came later. More and more complex systems have been layered on top of each other to create believable visuals. But with the latest generation of gaming hardware, games are moving toward representing the physics of how light really behaves.

“Radiosity—the light that fills the world from multiple bounces off surfaces—is notoriously complex to compute dynamically, so we simplify the problem to the point where it is practical to update the bounced lighting dynamically,” says Chris Doran, the founder of Geomerics. “But underneath these assumptions the model is physically correct and does replicate what goes on in real life. This is essential, both for the quality of the final image and the predictability it affords artists.”

Geomerics is the creator of Enlighten, software that helps the graphics engines that power video games render scenes with complex indirect lighting. The light itself seems to move through space, earning it the title of “volumetric.” With this tech, the scenes and locations in games are becoming more real. Beyond simple techniques that provide the appearance of light, shadows, and reflections, games are now actually mimicking the real world. Light moves through the air and interacts convincingly with everything around it. Flashlights diffuse through fog. Sunlight beams down through dusty air. Light hits the surfaces of objects and bounces off them differently depending on the material, creating more ambient lighting.

The result? More immersive worlds that are easier to lose yourself in. This makes sandbox games like Grand Theft Auto feel more solid, makes action games like Tomb Raider moodier and more cinematic, and makes open worlds focused on exploration, such as Skyrim, just feel real. A game world starts to feel like more of a place, less of a setting. Games are becoming increasingly realistic, and lighting is an important part of making something that is not just flat graphics on a screen.

“The eye is incredibly sensitive to the cues it receives from lighting. Subtle gradients and changes in color affect how the brain perceives the world. The closer you are to replicating this, the better the result,” said Doran. “Dynamic lighting provides for light to drive the action and story. Game artists can now light scenes in the same way that cinematographers light live-action scenes. There is vast potential here that is beginning to be tapped now.”

But what if it isn’t a traditional game? Immersive graphics are even more integral once you are seeing them through the lenses of a virtual reality headset. In 2015, with the launch of Samsung’s Gear VR and eventually the Oculus Rift and Sony’s Project Morpheus, gaming inside a world will become a reality for millions. Moving around in a 3D world that you perceive in 3D, with light that acts in three dimensions, turns a game into a virtual experience.

Doran said, “We rely heavily on lighting information to make sense of a 3D world. This is going to be a real challenge for virtual reality, as investigators are discovering how crucial it is to not break the illusion of VR.”

“A certain range of games greatly relies on the realism of their visuals, so here it is very important to spend those extra processor cycles modern hardware gives us on new and improved lighting models. But since volumetric lighting is computationally expensive one must be careful and balance it against other needs. So it really depends on the type of your game if you want to use it or not,” said Manekeller.

So in the end, like the rest of gaming’s history, technology will dictate how far game designers and artists can go using realistic lighting. Even with today’s hardware, raytracing and volumetric lighting are not a given. But, in time, as technology continues to improve, players will regularly bathe in the light of photoreal worlds.