I walk into the AT&T store with the intention of replacing my phone with something “less fancy” (I tell the person who greets me that “I just want something that can send and receive phone calls”) and soon I’m looking at a smartwatch. I haven’t even used a watch since the first grade. In fact, before this moment I’d thought of these things as both totally excessive and maybe recklessly expensive. But, waiting for my name to be called by the phone store guy, I look at the thing and wonder if I could be one of those people who uses a wearable. Maybe I too could join this contemporary phase of the Ray Kurzweil evolutionary trip. I try to imagine myself with an Apple Watch, Samsung Shirt, LG Tooth Fillings, Android Hair, all in sync, harmoniously keeping my spirit auto-updated to the cloud.

The appeal of wearables seems to lie at least somewhat with their sense of a firm companionship. It is hard to be completely lonely if your wrist is gadgeted, if you are at all times present in the swirl of global communication. Even the clumsy act of taking a phone out of your pocket is removed: just lift your arm, and it’s all there: immediate access to the hive mind extended onto the body itself, the abstract and untouchable condensed into a small, physical object.

The more familiar each micro-generation becomes with the attachments we develop for our devices, the more we seem to demand of them, personally. Those of us who grew up alongside the internet understand, instinctively, the depth that can rise up from what is ultimately a one-sided relationship with a machine. The emotions we think we are receiving, the ones we are in turn projecting onto our devices, are complicated by how private they are; private, because we are generating them totally, and complicated because they can sometimes feel as real as human emotion.

We often relate to these devices as if they do have their own sentience. When our cell phones come programmed with an AI who tells us not just where the nearest pizza shop is, but also seems to entertain the basic elements of conversation, these sorts of human/machine relationships are not only encouraged, but normalized, made a part of the texture of contemporary life. We are now used to asking machines questions, and we expect an answer.

///

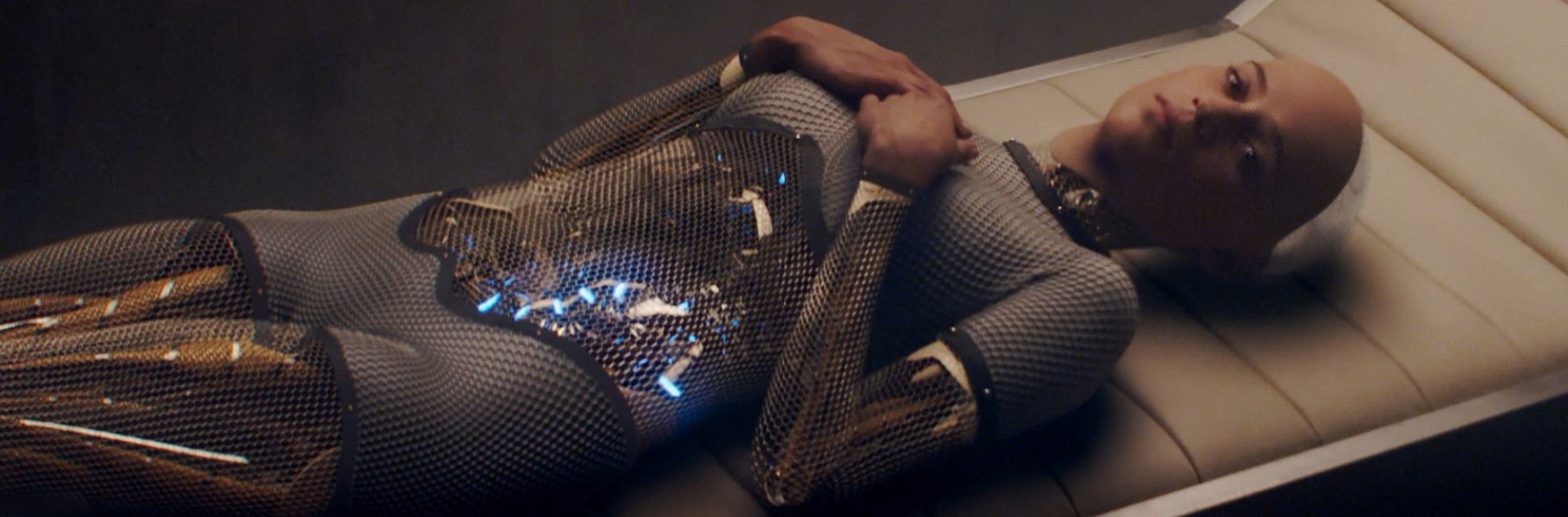

The recent sci-fi film Ex Machina seems initially concerned about this gulf between a human experience of the world and the experiences of an artificial consciousness. Its premise— an introverted computer programmer whisked away to the opulent island home of his frat-guru boss to test the realism of a new human-imitating artificial intelligence— presents itself first as an analytical experience. Ava, the AI, installed into a walking, talking female exoskeleton, is seen in formalized conversation sessions with Caleb, the programmer, who is in turn “tested,” in private, by Nathan, the boss. The question is eventually raised that maybe Ava is, in fact, pushing back against the men, testing their own limits, maybe even manipulating them through her own developing consciousness for personal gain.

Along the way, we, alongside the two men at the center of this story, are encouraged to wonder about the legitimacy of Ava’s view of the world. She is a product of Nathan’s tinkering— the “drafts” of Ava shown in Nathan’s work room stress her reality as a device still in the process of development. As a construct, as software, the question is raised of how much agency she could possibly have—how much of what is seen by the men as a near-human awareness is dependent on Nathan’s programming?

These questions of intellect are eventually overlooked, however, in favor of Ava’s body. As impassive and cranial as Ex Machina’s opening third can seem, the film ultimately reveals itself to be not so much interested in artificial intelligence as in artificial physicality: the idea that the route to being human is not through developing the mind, but through giving it a recognizably corporeal form. The film seems to suggest that what is internal can only be secondary to what is visible to the outside world; that having the “right” body is what gives us permission to be accepted as people.

///

The coming-into-being narrative is popular within AI stories, allegorically suggesting our own, individual journeys toward finding personhood. Ex Machina in particular calls back to Disney’s adaptation of Pinocchio, with its protagonist’s desire to stop being an object and become a “real boy,” and to the loud echoes of this in Spielberg’s film A.I., which appropriates the imagery of Pinocchio into a futureshock parable about the responsibilities humans might, or might not, have toward their electronic creations. Both of these stories seem to suggest that it is one thing to place consciousness inside of something, but it is a much more complicated thing to help in developing that consciousness.

Both Pinocchio and A.I. also show humanity as something worth aspiring toward. In these stories, being non-human, being “other,” is undesirable; the characters are missing something essential by living outside the human race. It is less that their inner self (puppet, robot) is bad than that being a human is so much better. It seduces them, and disrupts their ability to see themselves as legitimate members of society.

When artificial intelligences accept their non-humanness, however, it is often in the context of something dangerous to humans. The iconic HAL 9000 from 2001: A Space Odyssey is distinguished by an extreme rationality; humans, it is implied, are defined in part by their emotions, their potential to empathize and understand the subtleties between facts. HAL can only understand the world literally, and in the ultra-rational violence he inflicts on the people under his care, he becomes a villain.

The suggestion is that artificial intelligence is dangerous because it can’t feel; if left to their devices, computers will be unable to empathize and may resort to removing unneeded, wasteful elements from the landscape: humans. Our emotions, our capacity for feeling, are what elevate us and also what make us disposable, and are therefore in need of protection.

A gentler take on this idea is seen in Spike Jonze’s Her. Set in a well-appointed near-future/alternate present, it steps forward as a fable of the contemporary rise of technology-as-companion, the Apple product as life partner, but is at base a warning about where the human heart might be led by the heartless. Its main character, Theodore, comes into his own by communicating with his pocket AI, Samantha, a super-Siri who appears to begin mirroring his own growing attachment to, and love for, her.

Her tries to imagine a world where the borders between human and nonhuman experience are fluid, and where electronic consciousness may have a place as its own minority culture; however, the film still shows its central couple as fundamentally mismatched, two different species with an inability to really understand each other as equals. Samantha, becoming aware of her own identity, eventually leaves human culture (and Theodore) behind to upload herself into a cloud of like-minded AIs who are forming a new, non-physical community.

This could be read as an empowerment fantasy, of a disenfranchised life rewriting the terms of its own worth. But on the other hand, the film’s point of view is firmly with Theodore, whose pain over being rejected by Samantha becomes the emotional core of the film. Samantha, and all AIs, are still “other,” and Theodore’s relationship with her is depicted as something of a trespass; Samantha’s rejection of humanity, as well as of a physical form entirely, is, consequently, shown as a threat.

///

A minor exchange in the middle of Ex Machina shines a light on the trap of its thought experiment while trying to veer attention away from it. Caleb asks Nathan what the purpose of giving Ava a recognizably human/female appearance is, when she could easily just be programmed into a functional, genderless object. Nathan’s response is to shrug this off with a “Why wouldn’t I?” But he also draws a problematic conclusion: that our experiences are always gendered, that Ava could not be discerned as a person without also being defined as either male or female.

This idea clarifies the later behavior of Ava, whose ultimate and defining act is to kill her creator and then, by covering her mechanical body in the fake skin Nathan has invented, become, at least in appearance, a human. The image of Ava, now recognizably “nude,” watching herself in the mirror, seemingly happy that she now has a body which makes her a “woman,” defines the film’s perspective. Ava’s earlier, mechanical appearance, though gendered as female, is not good enough, still too un-human; she wants to have the real deal, the skin, the “female” shape, so that when she enters the human world, she will not be seen as the Other.

While making space for appearances like Ava’s final form, the film as a result excludes other ways of appearing and behaving. In the words of Judith Butler:

“How does the materialization of the norm in bodily formation produce a domain of abjected bodies, a field of deformation, which, in failing to qualify as the fully human, fortifies those regulatory norms? What challenge does that excluded and abjected realm produce to a symbolic hegemony that might force a radical rearticulation of what qualifies as bodies that matter, ways of living that count as “life,” lives worth protecting, lives worth saving, lives worth grieving?”

Ava idealizes the human form; her main fantasy, as she reveals to Caleb in one of their confessional dialogues, is to stand at a city intersection and watch humans passing, in a state of banality, simply living in the world. That this is her only apparent interest aside from reflecting Caleb’s own identity and interests back at him is telling. Ava has no clear curiosity about her own identity as a program, as an independent intelligence. Like Pinocchio, her desire is to “become real,” while overlooking that she is already, on different terms, real. Her original form, in Butler’s words, is abject, discarded for the sake of humanity.

But as Ava ascends the staircase out of Nathan’s compound, in a well-tailored dress, makeup, and high heels, a question hovers around the film. What would her story be like if it were truly about a mind coming into its own, in all its complexity and foreignness? Ava has no opportunity to subvert what was set up for her; the fantasy of escape she eventually gives in to is a human fantasy. Her programming and her design are based around mimicking what humans do. But she is not human, and we are never allowed to see what such a consciousness, if allowed to develop, might look like. Only software that imitates one set of human desires. The rest, the deeper part, remains unknowable.

///

The person at the phone store convinced me not to downgrade— the screens on these new phones are so clear, they’ll blow you away, he said. I gave in; I bought the phone with the bigger screen. That night I loaded Netflix on that phone, and watched the beginning of a movie, but ended it early. I had been sick the day before and my mind was somewhere else. I had a list of phone numbers to add into my contacts list, but the task seemed somehow too complicated. I thought about going to the bar, but the endless spring storm was still coming down outside. A wall of rain. I hit the button on the bottom of my phone, and waited for Siri to appear. I asked her, “When will it stop raining?”

She loaded for a while. Then, with some regret, responded: “There’s no weather information. Sorry about that.”

I looked through the screen door at the storm. The little walled-in patio would be flooded tomorrow. I pressed the button again: “Siri, what should I do tonight?” Quickly, she brought up a list of posts, the top one reading: “help me im so bored.” I pressed again: “What do you want me to do tonight?” Thunder cracked somewhere nearby as I watched the screen load. I imagined that I felt yesterday’s fever coming back.

“I,” said the program on my phone, “have very few wants.”