Nicole He is a creative technologist whose work lives in the space between video games, physical computing, and witty conceptual art. With experience advising projects for Kickstarter and imagining projects for Google, she’s programmed AI to converse with Billie Eilish for Vogue, built physical sensors that help users swipe on a dating app, and made a Twitter bot that regularly photographs her growing fig plant.

As Nicole embarks on two long-term interactive projects (an arts-funded experimental game that uses voice technology and a commercial indie game), she finds herself more and more interested in immersing herself in the world of games. Here, we speak with Nicole about what distinguishes the video game industry from that of creative technology, the particularities of one’s voice as a method to activate technology, and how behind every digital project is a living, breathing human.

Listen to this interview on Google Podcasts, Spotify, or Pocket Casts.

FROM ASSISTING TO MAKING

How did you first get into making things with computers?

It was a second career for me. I went to NYU for undergrad, and I studied journalism, but I never wanted to be a journalist. I got a job right after college at Kickstarter. I was doing mostly community management and outreach.

Kickstarter exposed me to many different creative communities in food, art, fashion, technology, and games. My job was to advise people on their projects, to tell them how to present them for an internet audience.

I had been working there for three years, and I was thinking, My job is to help people make things, but maybe I want to make things. Over the years, I had been exposed to this graduate program at NYU called ITP (Interactive Telecommunications Program), which is about creative technology and other weird things. I had some co-workers who went there for grad school. Also, I saw people doing weird shit with technology: really creative, strange things. I didn’t set out to become a programmer or anything like that.

At Kickstarter, we had the first Killer Queen cabinet out there in the world. Killer Queen is an incredible 10-player arcade game. It’s also now on Switch, but originally it was an arcade game: a 5-on-5, 10-player cabinet. The Kickstarter office had two floors. People worked upstairs, the Killer Queen cabinet was downstairs, and we were all obsessed with it. We wanted to play all the time, but the problem was telling people upstairs that somebody wanted to start a game downstairs. I thought, What we really need is a button that you can push that would notify people to play… It’d be great if somebody made that button. But nobody wanted to [laughs].

One of my co-workers helped me set up an Arduino and taught me a little bit of physical computing and writing a bit of code. We eventually had this giant red button by the Killer Queen cabinet that you could push. It would tweet out from an account saying that people want to play Killer Queen. Then the Killer Queen-heads at Kickstarter followed that account and got the notification and came down to play.

So that was my introduction to making things with technology. I ended up attending ITP and immediately fell in love with programming. As soon as I got there, I realized that coding was a tool for creative expression, and it felt limitless. I could do anything I wanted with it.

I was always interested in making things that were playful about technology. By being in school and doing many homework assignments, I noticed a thread through my own projects about the themes I was interested in exploring.

There are so many great examples of people for whom a Kickstarter might be their first creative project. It’s de-risking that portion of it because you don’t have to put the entire thing out into the world—it’s all about if you can raise enough interest to manifest what you’re imagining.

My job involved a fair amount of traveling to different creative conferences for people in various creative fields. There’s one that stood out—Eyeo, in Minneapolis in 2014. I remember feeling like my job going there was just to talk about Kickstarter. I wasn’t part of that community, and I didn’t know how to do any of the things that they were doing. The types of things that people were doing there felt like pure magic to me, and also it made me think differently about the things that you can do with computers.

FINDING A VOICE

After ITP, you became interested in voice technology. What was it about the human voice that spoke to you as a creator?

At ITP, a lot of my projects were text-based. A lot of generative text-type stuff, inspired by Allison Parrish, who was one of my professors. When I graduated, I got a job at Google Creative Lab. They brought me on because they were interested in doing some experiments with their voice assistant, Google Home. They told me, You’ve done some stuff with text, and it feels quite related, which is true.

Having the computer generate text is the first step towards having the computer talk to you. When you think about voice technology, you think about Alexa or Siri or Google Home most of the time. That technology is probably not the first thing that comes to mind for a creative coder to use because, again, it’s very platform-specific to these big companies. That was my introduction, though. I quickly found it to be a fascinating medium to make creative work with.

I was interested in the character of a computer, or the character of an AI in people’s popular imaginations. When an AI tells you to do something or says something, there’s this sense of authority that people ascribe to it, because it’s a computer. The thing that’s interesting about voice in particular is that you can’t help but personify a voice. When you hear someone talking, even if it has a very computerized-sounding voice, your brain can’t help but imagine who that is. It starts projecting things onto that entity, and that’s fascinating to me. I felt like there was a lot of creative potential in interactions, games, and talking to that kind of character. I was always interested in what it feels like to speak to a computer.

Do you feel like working with voice has impacted what you hear out in the world?

I definitely pay attention to chatbots, or when somebody says, An AI said this or did this. My experience has definitely made me think differently about what people who say these things actually mean. This is true for probably anybody that works with any type of AI technology. You realize how much human effort goes into this stuff.

It’s basically run by humans, every single part of it. Whether the labor is invisible, outsourced, or not, it’s just computer programs run by people. The thing you notice is that when people speak in the abstract about computer programs written by engineers versus AI, they think about those things very differently.

Along those lines, in what ways do you think about how people interact with your work?

Creatively, I’m interested in people’s expectations about technology and how it works. I’m especially interested in the difference between those expectations—which are usually informed by science fiction—and how the technology actually works [laughs]. Knowing people have specific ideas already about the technology that we’re using to make something absolutely goes into my creative process.

Also, when you’re working with voice technology, you’re essentially designing a character. When you make a computer talk, you’re making a character talk, and there are two sides to it. For voice technology, there is text-to-speech, which is the computer talking. Then there’s speech-to-text, which is the human talking, the computer trying to capture what you’re saying, and then doing things in response. Having both of those things impacts the way that people experience a project.

It’s not just hearing the computer talk; it’s the fact that you use your voice to communicate with the computer. That has a different impact on how we think about the computer that we’re talking to. I’m always thinking about that when I’m doing voice stuff. There are actual technical limitations: voice technology is not that good! The speech-recognition stuff is especially bad. You have to set expectations, and design around that.

I have Alexa in the house. I had to modify my speech to what it hears—I have to think about what I want it to do. I’ve memorized that string of things that I need to tell it, and then that becomes a way that I signal it. You have to be very, very specific.

The opposite is also interesting—as you have said, you have learned how to talk to Alexa. You realize that you have to say things in a certain way for it to understand you. A big part of my projects is also subverting those expectations. I’m interested in designing something that can give the illusion of voice assistants understanding you in a way that people out in the world don’t.

ASKING QUESTIONS WITH AI

You worked with Vogue on an interview project with Billie Eilish and AI. How did you sit down and figure out what you ultimately wanted to do?

Vogue had this idea of doing an AI interview with a celebrity for a while. They had pitched it to various celebrities, and nobody took them up on it until Billie Eilish, and I respect her for that. They had some concept about what they wanted, but they weren’t sure how to do it precisely on a technical level. The AI was trained on all the interview questions that people have asked Billie Eilish over the years. We collected all the publicly available interview questions that have ever been asked of Billie Eilish. I used that to fine-tune GPT-2 to come up with a list of new interview questions. Then Vogue selected some and asked them to her as the AI.

The other piece is that we also took all the Billie Eilish lyrics and fine-tuned the model to generate new Billie Eilish lyrics. Obviously, these datasets are very, very small as things go, in terms of actual datasets that you would want to use for training machine learning models. The results were predictably weird, which is what they were looking for.

But the result was good because Billie really took it seriously. She answered questions very thoughtfully. At the end of the video, Billie says something like, “I appreciated these questions coming from the AI, because usually when human interviewers ask me questions, first of all, they ask me the same questions over and over… Second, they’re trying to get something from me. They’re trying to get me to say something that would get them clicks or views or whatever, but the AI doesn’t have that kind of motivation, it’s not biased like humans.”

I thought [what Billie said] was incredible because we’re always talking about how AI is biased, which is true. This was an example of the opposite: humans were biased, and the AI wasn’t.

If a real person asked her these questions, it would be weird. One of the questions was, “Who consumed so much of your power in one go?” This would be an odd question for somebody to ask, but because the AI is the one that asked that question, it changes the whole meaning of the entire thing.

Your project, ENHANCE.COMPUTER, has more of an explicit game feel. What was the impetus for that project, and how do you see it fitting into your larger body of work?

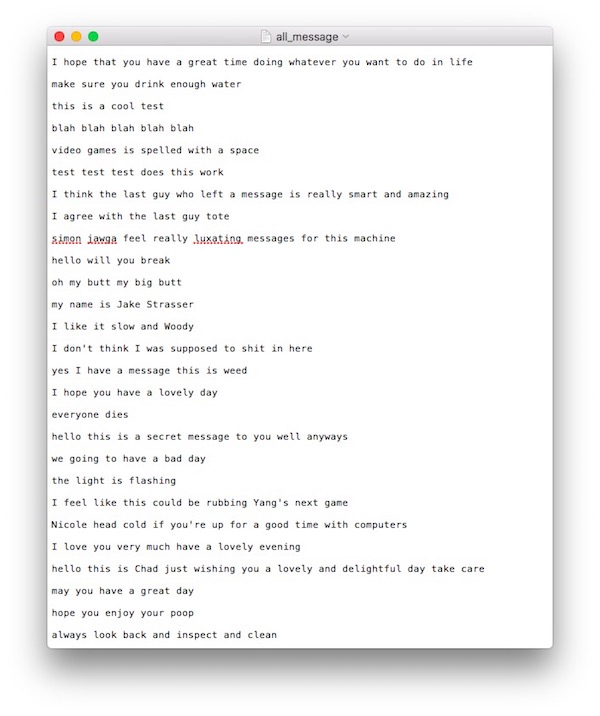

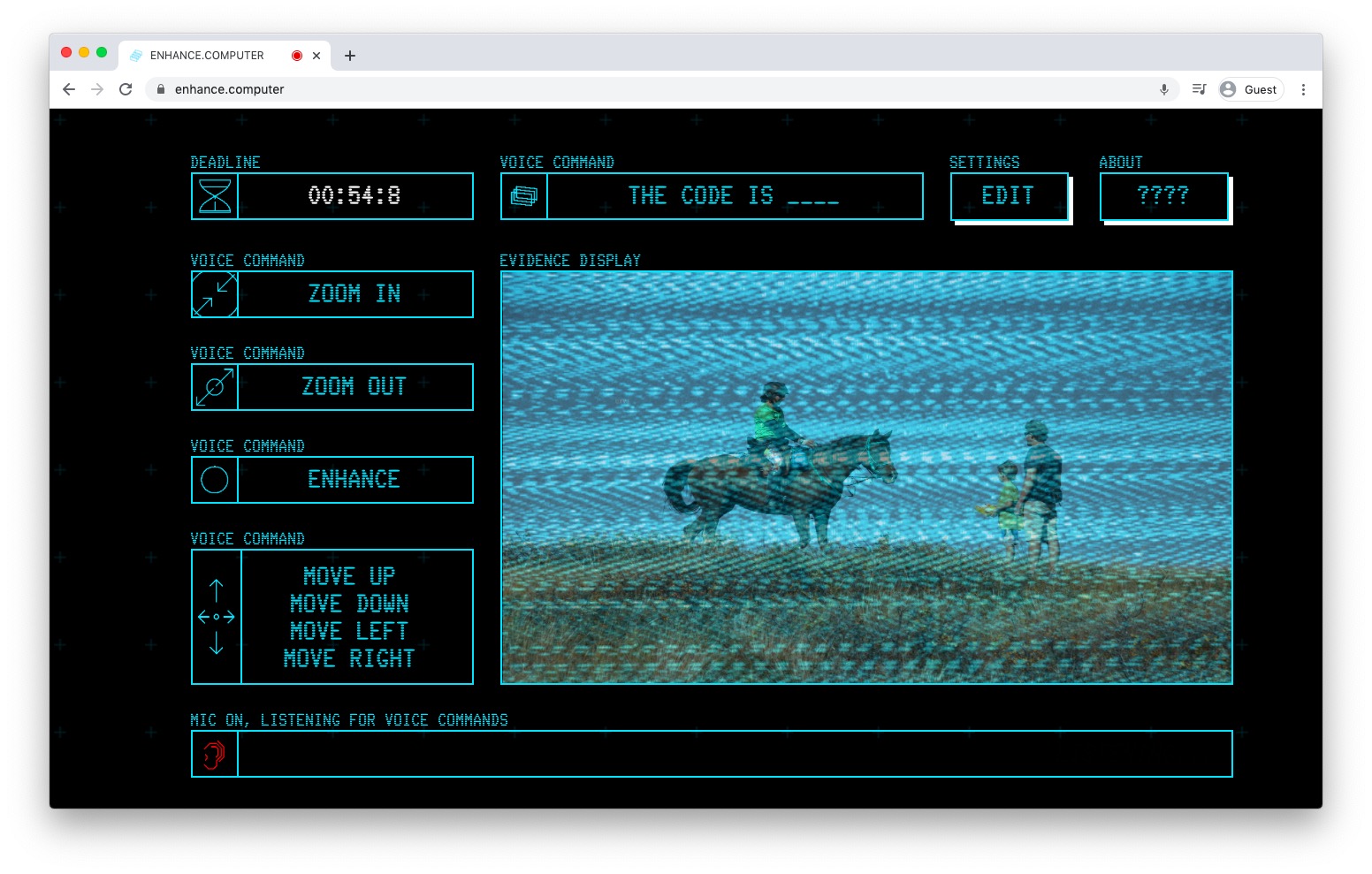

ENHANCE.COMPUTER was a commission for a 2018 exhibition in Melbourne. I had been thinking about that sci-fi trope, where people in a movie or TV show are looking at a screen, and they’re like, Zoom in, enhance, then [the computer] just does that. Usually, they’re investigating something. Blade Runner is a classic example, but also police shows do that a lot.

It makes sense why they do it in movies—it’s boring to see somebody clicking around a computer. It makes more sense for somebody to say things out loud for dramatic effect, and it’s funny. There’s an excellent supercut on YouTube of many examples of this. They say, Rotate the image to 180 degrees, and the image rotates—it’s kind of absurd.

It’s a trope people are familiar with. I thought it would be interesting to try to build that program in real life and see what happens. The game is in the browser. It says that you’re a detective, and that someone’s about to commit a crime. You have to look for a secret code in an image and say that code out loud.

What is interesting about that game is that predictably, it doesn’t work that well. Yes, you can win the game—it’s not that hard necessarily. At the same time, you can see the places where speech recognition fails a lot.

That’s the built-in Chrome Web Speech API. It reveals the distance I talked about between our expectations of the technology we see in movies and how it actually works. This project is in many ways on both sides of my work. One side is my creative technology, voice-centered work, and the other is my newer video game work.

You have one project that is an SMS bot that enables people to create texting bots themselves. For this project, you’re building a tool for someone else to create something. How do you see that fitting into your larger body of work?

I would like to do more of that. That project is a Google Doc tutorial that tells you how to create an SMS bot using Google Sheets. Essentially, you don’t have to write your own code. The purpose of it was that anybody (but hopefully, say, mutual aid groups or anybody else who needs to communicate with their community and collect information via SMS) can do it without technical resources. The SMS bot project is explicitly for other people to use.

I’ve learned through teaching. I taught a class two years ago at ITP, and I’m teaching it again, starting in a month, about voice technology. It’s called Hello, Computer: Unconventional Uses of Voice Technology. I found a lot of joy in the process of creating examples to teach in class and then having the students take those examples, learn from them, and make their own thing. It is very satisfying.

Much of that definitely comes more from the creative technology side, which involves a lot more basic technical skills. Giving people those tools to make their own thing is great. I guess a lot of that is as simple as open-sourcing.

SWITCHING SIDES, BLENDING PRACTICES

In the past, you had to choose to either be a game-maker or an artist. How do you see game making fitting into your larger creative practice?

There are a couple of concrete reasons why I am focusing on game development now. We have creative technology as one industry, and then indie games as another. The way that people make money is very different, and it affects the things that you make. In creative tech, it’s largely advertising money. A company like Samsung will give you money to make a cool interactive experience for them. Many people who, say, work as creative technologists do this type of work at studios, or in-house somewhere. You might be trying cool things with new technology that is ultimately meant for a deep-pocketed company for marketing purposes. It’s probably a more stable job than many indie game developers have, but it impacts the kind of work you can do. A lot of people have their own artistic practice on the side as well.

When people make indie games, they are making products. There are certainly downsides to that if you want to make money off of a game—you have to make it appealing to people because they’re going to choose whether or not to spend money on it. Also, it’s a risk, in terms of how healthy the working practices are. It’s not necessarily good either, because people will spend years working on something that never makes any money. However, you do have to appeal to the whims of the market when you’re making games. On the other hand, you’re not making advertisements for big companies, so there are different types of things you’re able to do.

One thing I’ve noticed doing creative technology work is that when you make an interactive experience, people look at it and think, Oh, cool, that’s cool. Then they leave. The question is, How do you get somebody to engage with your interactive experience for more than two minutes? I guess you call it a video game, and that’s how you do it [laughs]!

How are you bridging that gap as someone conversant in both the games and art spaces?

The way I’m doing it is by working on two games. One is explicitly an arts game that will be free to play. With that one, I get to take many more creative risks, in terms of the things that can go wrong in that game, and unusual things, too.

Then there’s the commercial indie game that I’m working on. We’re still in the early stages for that, but I’m approaching it very differently. That is going to be a traditional video game. Separating them out into two different projects that have distinct funding, features, styles, and their own audiences is the way that I’m doing it right now.